Piper Arm Kinematics Implementation

Abstract

This chapter implements the forward kinematics (FK) and Jacobian-based inverse kinematics (IK) for the AgileX PIPER robotic arm using the Eigen linear algebra library, as well as the implementation of custom interactive markers via interactive_marker_utils.

Tags

Forward Kinematics, Jacobian-based Inverse Kinematics, RVIZ Simulation, Robotic Arm DH Parameters, Interactive Markers, AgileX PIPER

Function Demonstration

Code Repository

GitHub Link: https://github.com/agilexrobotics/Agilex-College.git

1. Preparations Before Use

Reference Videos:

1.1 Hardware Preparation

- AgileX Robotics Piper robotic arm

1.2 Software Environment Configuration

- For Piper arm driver deployment, refer to: https://github.com/agilexrobotics/piper_sdk/blob/1_0_0_beta/README(ZH).MD

- For Piper arm ROS control node deployment, refer to: https://github.com/agilexrobotics/piper_ros/blob/noetic/README.MD

- Install the Eigen linear algebra library:

sudo apt install libeigen3-dev

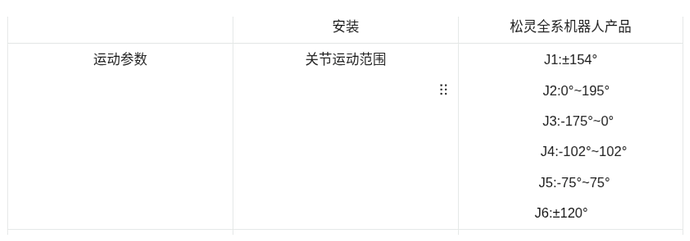

1.3 Prepare DH Parameters and Joint Limits for AgileX PIPER

The modified DH parameter table and joint limits of the PIPER arm can be found in the AgileX PIPER user manual:

2. Forward Kinematics (FK) Calculation

The FK calculation process essentially converts angle values of each joint into the pose of a specific joint of the robotic arm in 3D space. This chapter takes joint6 (the last rotary joint of the arm) as an example.

2.1 Prepare DH Parameters

- Build the FK calculation program based on the PIPER DH parameter table. From the modified DH parameter table of AgileX PIPER in Section 1.3, we obtain:

// Modified DH parameters [alpha, a, d, theta_offset]

dh_params_ = {

{0, 0, 0.123, 0}, // Joint 1

{-M_PI/2, 0, 0, -172.22/180*M_PI}, // Joint 2

{0, 0.28503, 0, -102.78/180*M_PI}, // Joint 3

{M_PI/2, -0.021984, 0.25075, 0}, // Joint 4

{-M_PI/2, 0, 0, 0}, // Joint 5

{M_PI/2, 0, 0.091, 0} // Joint 6

};

For conversion to Standard DH parameters, refer to the following rules:

Standard DH ↔ Modified DH Conversion Rules:

-

Standard DH → Modified DH:

αᵢ₋₁ (Standard) = αᵢ (Modified)

aᵢ₋₁ (Standard) = aᵢ (Modified)

dᵢ (Standard) = dᵢ (Modified)

θᵢ (Standard) = θᵢ (Modified)

-

Modified DH → Standard DH:

αᵢ (Standard) = αᵢ₊₁ (Modified)

aᵢ (Standard) = aᵢ₊₁ (Modified)

dᵢ (Standard) = dᵢ (Modified)

θᵢ (Standard) = θᵢ (Modified)

The converted Standard DH parameters are:

// Standard DH parameters [alpha, a, d, theta_offset]

dh_params_ = {

{-M_PI/2, 0, 0.123, 0}, // Joint 1

{0, 0.28503, 0, -172.22/180*M_PI}, // Joint 2

{M_PI/2, -0.021984, 0, -102.78/180*M_PI}, // Joint 3

{-M_PI/2, 0, 0.25075, 0}, // Joint 4

{M_PI/2, 0, 0, 0}, // Joint 5

{0, 0, 0.091, 0} // Joint 6

};

- Prepare DH Transformation Matrices

- Modified DH Transformation Matrix:

- Rewrite the modified DH transformation matrix using Eigen:

T << cos(theta), -sin(theta), 0, a,

sin(theta)*cos(alpha), cos(theta)*cos(alpha), -sin(alpha), -sin(alpha)*d,

sin(theta)*sin(alpha), cos(theta)*sin(alpha), cos(alpha), cos(alpha)*d,

0, 0, 0, 1;

- Standard DH Transformation Matrix:

- Rewrite the standard DH transformation matrix using Eigen:

T << cos(theta), -sin(theta)*cos(alpha), sin(theta)*sin(alpha), a*cos(theta),

sin(theta), cos(theta)*cos(alpha), -cos(theta)*sin(alpha), a*sin(theta),

0, sin(alpha), cos(alpha), d,

0, 0, 0, 1;

- Implement the core function

computeFK() for FK calculation. See the complete code in the repository: https://github.com/agilexrobotics/Agilex-College.git

Eigen::Matrix4d computeFK(const std::vector<double>& joint_values) {

// Check if the number of input joint values is sufficient (at least 6)

if (joint_values.size() < 6) {

throw std::runtime_error("Piper arm requires at least 6 joint values for FK");

}

// Initialize identity matrix as the initial transformation

Eigen::Matrix4d T = Eigen::Matrix4d::Identity();

// For each joint:

// Calculate actual joint angle = input value + offset

// Get fixed parameter d

// Calculate the transformation matrix of the current joint and accumulate to the total transformation

for (size_t i = 0; i < 6; ++i) {

double theta = joint_values[i] + dh_params_[i][3]; // θ = joint_value + θ_offset

double d = dh_params_[i][2]; // d = fixed d value (for rotary joints)

T *= computeTransform(

dh_params_[i][0], // alpha

dh_params_[i][1], // a

d, // d

theta // theta

);

}

// Return the final transformation matrix

return T;

}

2.2 Verify FK Calculation Accuracy

- Launch the FK verification program:

ros2 launch piper_kinematics test_fk.launch.py

- Launch the RVIZ simulation program, enable TF tree display, and check if the pose of

link6_from_fk (the arm end-effector calculated by FK) coincides with the original link6 (calculated by joint_state_publisher):

ros2 launch piper_description display_piper_with_joint_state_pub_gui.launch.py

High coincidence is observed, and the error between link6_from_fk and link6 is basically within four decimal places.

3. Inverse Kinematics (IK) Calculation

The IK calculation process essentially determines the position of each joint of the robotic arm required to move the arm’s end-effector to a given target point.

3.1 Confirm Joint Limits

- Joint limits of the PIPER arm must be defined to ensure the IK solution path does not exceed physical constraints (preventing arm damage or safety hazards).

- From Section 1.3, the joint limits of the PIPER arm are:

- The joint limit matrix is defined as:

std::vector<std::pair<double, double>> limits = {

{-154/180*M_PI, 154/180*M_PI}, // Joint 1

{0, 195/180*M_PI}, // Joint 2

{-175/180*M_PI, 0}, // Joint 3

{-102/180*M_PI, 102/180*M_PI}, // Joint 4

{-75/180*M_PI, 75/180*M_PI}, // Joint 5

{-120/180*M_PI, 120/180*M_PI} // Joint 6

};

3.2 Step-by-Step Implementation of Jacobian Matrix Method for IK

Solution Process:

- Calculate Error e:

Difference between current pose and target pose (6-dimensional vector: 3 for position + 3 for orientation).

- Is Error e below tolerance?

- Yes: Return current θ as the solution.

- No: Proceed to iterative optimization.

- Calculate Jacobian Matrix J: 6×6 matrix.

- Calculate Damped Pseudoinverse:

J⁺ = Jᵀ(JJᵀ + λ²I)⁻¹

λ is the damping coefficient (avoids numerical instability in singular configurations).

5. Calculate Joint Angle Increment:

Δθ = J⁺e

Adjust joint angles using error e and pseudoinverse.

6. Update Joint Angles:

θ = θ + Δθ

Apply adjustment to current joint angles.

7. Apply Joint Limits.

8. Normalize Joint Angles.

9. Reach Maximum Iterations?

- No: Return to Step 2 for further iteration.

- Yes: Throw non-convergence error.

Core Function computeIK():

std::vector<double> computeIK(const std::vector<double>& initial_guess,

const Eigen::Matrix4d& target_pose,

bool verbose = false,

Eigen::VectorXd* final_error = nullptr) {

// Initialize with initial guess pose

if (initial_guess.size() < 6) {

throw std::runtime_error("Initial guess must have at least 6 joint values");

}

std::vector<double> joint_values = initial_guess;

Eigen::Matrix4d current_pose;

Eigen::VectorXd error(6);

bool success = false;

// Start iterative calculation

for (int iter = 0; iter < max_iterations_; ++iter) {

// Calculate FK for initial state to get position and orientation

current_pose = fk_.computeFK(joint_values);

// Calculate error between initial state and target pose

error = computePoseError(current_pose, target_pose);

if (verbose) {

std::cout << "Iteration " << iter << ": error norm = " << error.norm()

<< " (pos: " << error.head<3>().norm()

<< ", orient: " << error.tail<3>().norm() << ")\n";

}

// Check if error is below tolerance (separate for position and orientation)

if (error.head<3>().norm() < position_tolerance_ &&

error.tail<3>().norm() < orientation_tolerance_) {

success = true;

break;

}

// Calculate Jacobian matrix (analytical by default)

Eigen::MatrixXd J = use_analytical_jacobian_ ?

computeAnalyticalJacobian(joint_values, current_pose) :

computeNumericalJacobian(joint_values);

// Use Levenberg-Marquardt (damped least squares)

// Δθ = Jᵀ(JJᵀ + λ²I)⁻¹e

// θ_new = θ + Δθ

Eigen::MatrixXd Jt = J.transpose();

Eigen::MatrixXd JJt = J * Jt;

// lambda_: damping coefficient (default 0.1) to avoid numerical instability in singular configurations

JJt.diagonal().array() += lambda_ * lambda_;

Eigen::VectorXd delta_theta = Jt * JJt.ldlt().solve(error);

// Update joint angles

for (int i = 0; i < 6; ++i) {

// Apply adjustment to current joint angle

double new_value = joint_values[i] + delta_theta(i);

// Ensure updated θ is within physical joint limits

joint_values[i] = std::clamp(new_value, joint_limits_[i].first, joint_limits_[i].second);

}

// Normalize joint angles to [-π, π] (avoid unnecessary multi-turn rotation)

normalizeJointAngles(joint_values);

}

// Throw exception if no solution is found within max iterations (100)

if (!success) {

throw std::runtime_error("IK did not converge within maximum iterations");

}

// Calculate final error (if required)

if (final_error != nullptr) {

current_pose = fk_.computeFK(joint_values);

*final_error = computePoseError(current_pose, target_pose);

}

return joint_values;

}

3.3 Publish 3D Target Points for the Arm Using Interactive Markers

- Install ROS2 dependency packages:

sudo apt install ros-${ROS_DISTRO}-interactive-markers ros-${ROS_DISTRO}-tf2-ros

- Launch

interactive_marker_utils to publish 3D target points:

ros2 launch interactive_marker_utils marker.launch.py

- Launch RVIZ2 to observe the marker:

- Drag the marker and use

ros2 topic echo to verify if the published target point updates:

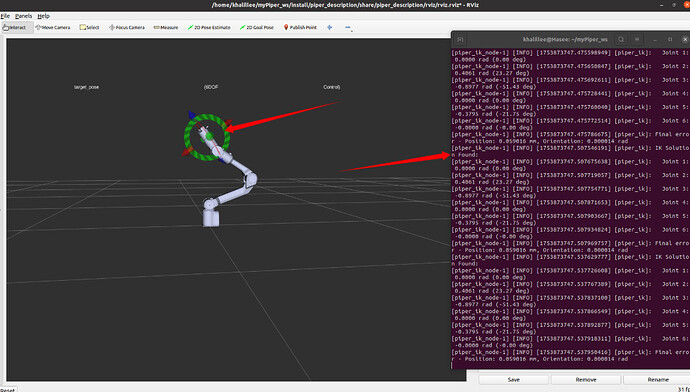

3.4 Verify IK Correctness in RVIZ via Interactive Markers

- Launch the AgileX PIPER RVIZ simulation demo (the model will not display correctly without

joint_state_publisher):

ros2 launch piper_description display_piper.launch.py

- Launch the IK node and

interactive_marker node (in the same launch file). The arm will display correctly after successful launch:

ros2 launch piper_kinematics piper_ik.launch.py

- Control the arm for IK calculation using

interactive_marker:

- Drag the

interactive_marker to see the IK solver calculate joint angles in real time:

- If the

interactive_marker is dragged to an unsolvable position, an exception will be thrown:

4. Verify IK on the Physical PIPER Arm

- First, launch the script for CAN communication with the PIPER arm:

cd piper_ros

./find_all_can_port.sh

./can_activate.sh

- Launch the physical PIPER control node:

ros2 launch piper my_start_single_piper_rviz.launch.py

- Launch the IK node and

interactive_marker node (in the same launch file). The arm will move to the HOME position:

ros2 launch piper_kinematics piper_ik.launch.py

- Drag the

interactive_marker and observe the movement of the physical PIPER arm.

1 post - 1 participant

Read full topic